GraphQL is awesome for clients.

GraphQL offers a lot of power and flexibility to the API consumer. For example, the following query will fetch a list of users along with their id, email, and group:

{

users {

id

email

group {

id

name

}

}

}

The API consumer loves this because:

- They get to fetch only the fields they actually use.

- Future versions of the API that add new fields will not change the behaviour of this request.

- They can choose which associated data to load along with the user (in this case group). There is no need to do a separate API call to fetch the group for each user, which would create an N+1 querying issue.

It’s definitely worth checking out the GraphQL website if you’re still not persuaded. There’s a lot to like.

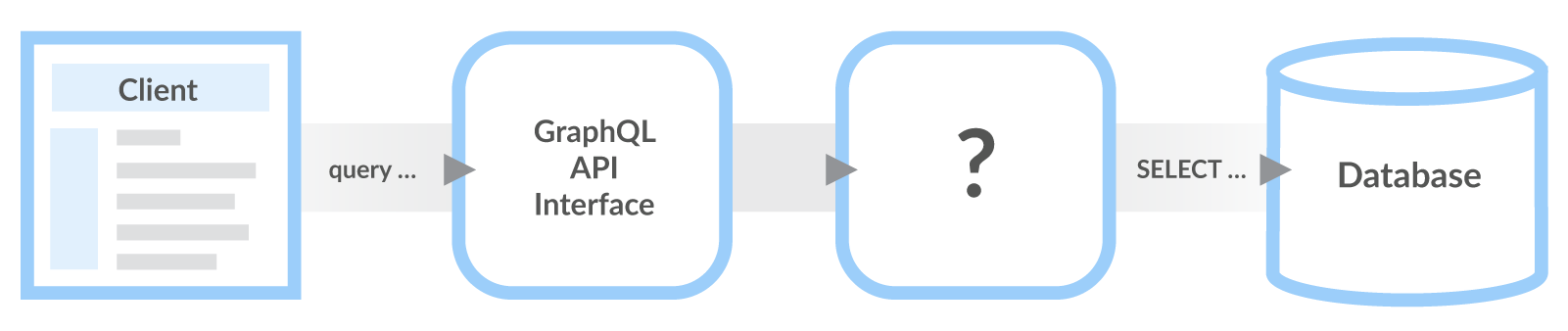

Scalable server implementations are tricky

Let’s think about how we implement the users with groups example on the server. The standard way to implement GraphQL servers is to describe the API schema and then map each type to a resolver. This example needs a resolveUsers function and a resolveGroupById function. If the data is stored in a relational database, then groups will not be loaded unless they are actually used by the query. This means that the API call is very efficient when groups are not requested, but when groups are included there is one call to resolveGroupById for every user fetched. The SELECT N+1 issue still exists, it’s just been moved from the client to the server. That’s an improvement, but it doesn’t eliminate the problem.

Is SELECT N+1 even a problem?

It depends. In some cases, it might not cause problems but it’s not the best practice, and things can quickly get out of hand as query complexity increases. N+1 can turn into (N * M * L) + 1

Database perspective

With our naive implementation, the database will see calls like this (all in separate round trips):

SELECT … FROM users LIMIT $page_size

SELECT … FROM groups WHERE id = $group_id

SELECT … FROM groups WHERE id = $group_id

SELECT … FROM groups WHERE id = $group_id

Now let’s say that the requirements of our application demand that we scale beyond these restrictions. We can’t afford to use this naive implementation. First, we’ll look at how this problem is already solved in another API framework.

The OData solution

If this were a normal REST API, there would be an endpoint that looks like this:

GET /api/users_with_groups

and it would generate this SQL:

SELECT … FROM users\LEFT JOIN groups ON groups.id = users.group_id

LIMIT $page_sizeThis feels natural for someone used to working with relational databases, but it’s a bit difficult to reconcile with the flexible nature of GraphQL. Conceivably, it is possible to inspect the request, find out which tables need to be joined together, and generate an SQL query that selects the exact data required to fulfill that request. In fact, there is another API protocol, named OData, whose primary implementation does precisely this. Typically, the request would look something like:

GET /api/odata/users?$expand=groupSince OData is mostly used in the .NET ecosystem, if the API interface looks like this there’s a pretty good chance that the OData query is being translated into an IQueryable and then to a single SQL SELECT statement, with the necessary JOINs. My initial intuition was that this is the exact, ideal behaviour, but experience tells me otherwise. Of course, OData is just an API protocol. There’s no reason that OData must use dynamic joins but from my experience, every one does because that’s how the Microsoft library works.

Why the OData solution is not effective

The approach of essentially allowing API consumers to build their own SQL queries (with limitations) is riddled with problems:

- It gets harder to control exactly what the SQL statements are going to look like and avoid malicious queries that kill the server.

- Usually, page sizes and maximum entities in the $expand clause are the only way to limit query complexity.

- SQL query plan is less likely to be cached because there are more unique queries.

- Application layer caching is harder to implement and less effective.

- Transitioning to a NoSQL database becomes very difficult because the relational database query interface has leaked through the API.

In short: consumers get too much control over the generated SQL.

An alternative, GraphQL-friendly solution

Rather than trying to “fix” our generated SQL, we can go in the opposite direction. Instead of building complex queries with a lot of joins we’ll stick to very simple queries like these:

-- Get one thing by ID

SELECT … FROM things WHERE id = $id

-- Get associated things

SELECT … FROM child_things WHERE parent_id = $thing_id LIMIT $page_size

-- Get a page of things

SELECT … FROM things WHERE created > $min_date ORDER BY created LIMIT $page_size

JOINs are not forbidden, they just need to be static. For example, things could actually be an aggregate that requires joining multiple tables together. The only dynamic parts are the actual variables ($id, $page_size, etc), and in some cases the contents of the WHERE and ORDER BY clauses to accommodate things like user-controlled sorting.

The benefit of keeping data fetch operations nice and simple is that there are a lot of optimization opportunities. Even though there are still N+1 calls to resolver functions, the database doesn’t need to be hit nearly as frequently.

Optimizations

Memoization

In Node.js this can be accomplished with the library dataloader. Memoization is a type of caching where a function’s return value is cached for a given set of inputs. This means that if there are many calls to resolveGroupById, it will only execute once per unique group ID and then reuse the value. The cached value only needs to live for the duration of the request (or one event loop iteration in JS) in order to be useful. In some use cases, this doesn’t help at all but in others, it massively reduces the number of database calls. Imagine loading 100 users with their group, but the only groups are “admins” and “users”. There would be one query to load the users and a maximum of two queries to load the groups because there is a lot of repetition.

Batching

This can also be accomplished with dataloader. In an N+1 database query situation, the N queries may be batchable. Instead of calling resolveGroupById once per group, call resolveManyGroupsById once with the list of unique IDs. The result could be many SELECT statements in a single round trip. It could also generate a single SELECT:

SELECT … FROM groups WHERE id IN ($id_1, $id_2, …)Database caching

A simpler database interface, where data is only fetched by ID or listed with a simple filter, means fewer unique query plans. That also means query plans are more likely to be cached and less RAM is required to cache actual data in memory. Not all databases work the same, but in general, in-database caching features work better with fewer unique queries.

Application layer caching

This refers to a cache layer that sits somewhere in between the web API and the actual database. It probably involves storing data in in-process memory, or in an external cache database like Redis or Memcached. With predictable queries, it is easier to define aggregates that can be fetched only by ID. Since there are likely a ton of GetThingById calls, all that’s needed is a key/value store, as long as there is a reasonable way to invalidate the cache when needed. Ideally, all writes are encapsulated by the API so that InvalidateThingById can be called whenever something changes.

Wrapping up

It turns out that the ostensibly naive approach of calling many resolvers for a single request actually scales pretty well, as long as you are willing to invest some effort into the data access layer that sits between the resolver functions and the database itself. The alternative solution of generating complex, dynamic SQL actually ends up putting complexity in the wrong place and creates more problems for an application that needs to scale well. The best part about this conclusion is that it makes total sense to start out with the simplest possible implementation and then add in the optimizations only if, or when, they are needed, without undoing any previous work.