In 2016, Sendwithus hosted a recurring event at our San Francisco HQ: The Email Power Hour. We invited local email aficionados and nerds alike to join us for snacks and drinks while geeking out on email. One of these events focused on A/B testing.

After a bit of chatting, snacking, and sipping, we settled in for an hour of email discussion. Alex briefly covered the basics of A/B testing before diving into three awesome case studies.

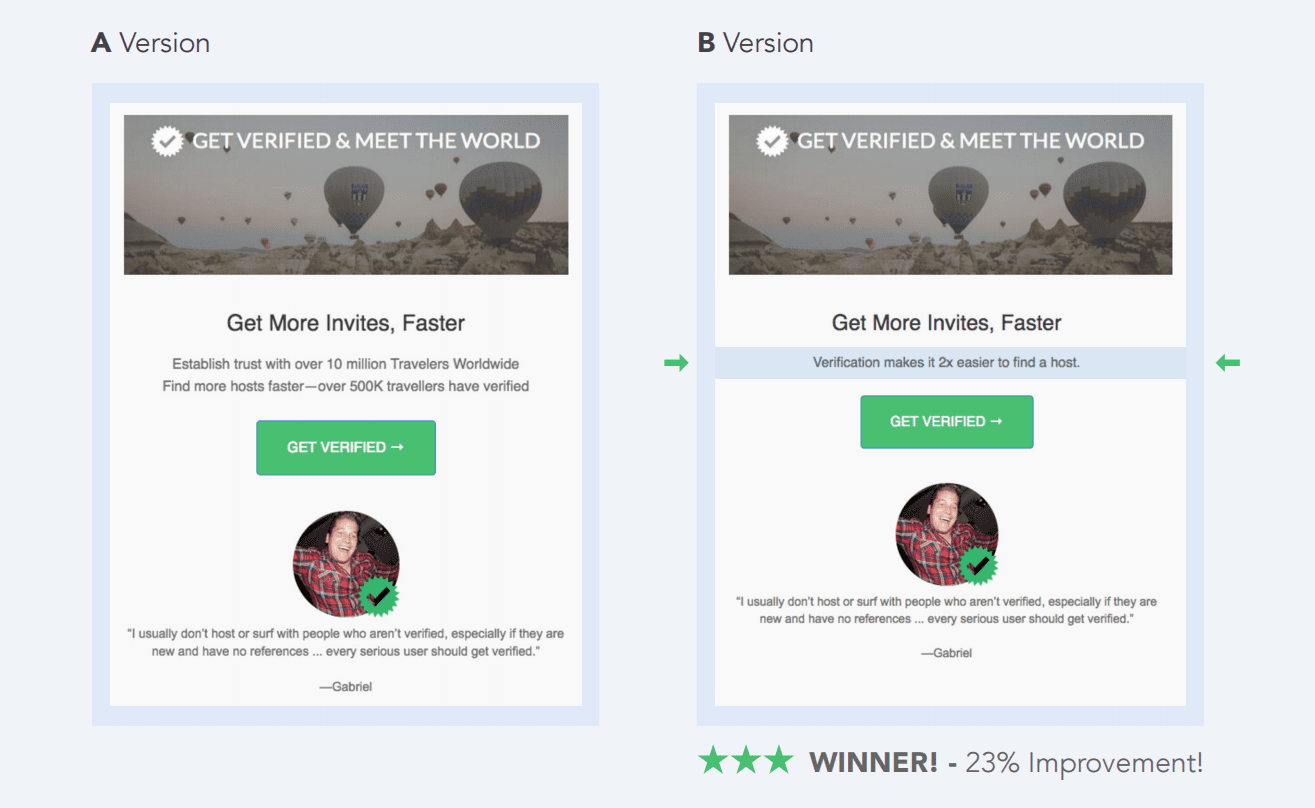

Our friend Alli Shea, head of Growth Marketing at Couchsurfing, often uses A/B testing in email as a low-friction, low-dependency way not only to optimize the emails themselves but also to test product messaging and imagery. She can then use the results to work with the product and dev teams on future product improvements and communications, such as in-app and website messaging.

For anyone unfamiliar with Couchsurfing, it hosts a network of over 4 million people around the world who are willing to open their lives and homes, free of charge, to travelers bouncing around the globe. Alli recently ran a copy test on the welcome email series to hone the product messaging around surfer verification. Although the core service is free, Couchsurfing offers a verification option that provides an ad-free website and app experience and improves the likelihood that a host will accept a surfer.

The test was a small text variation that pitted two messaging strategies for verification against each other:

A) “Everyone else is doing it.”

B) “What’s in it for me?”

Option B produced a 23% higher send-to-convert rate than Option A. The winning angle, which promoted the better host acceptance rate, was then carried over to the verification landing page. Next, Alli plans to test other elements of the email, such as swapping out the balloon graphic and featuring different surfers, to fine-tune the combination and keep improving the onboarding series.
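A “23% better” rate is most likely a relative lift, not a jump of 23 percentage points, and mixing the two up is a common source of confusion when sharing results. Assuming the relative reading, here is a minimal Python sketch of the difference. The function names are hypothetical and the counts are invented for illustration; they are not Couchsurfing’s data.

```python
def conversion_rate(conversions: int, sends: int) -> float:
    """Send-to-convert rate: conversions divided by emails sent."""
    if sends <= 0:
        raise ValueError("sends must be positive")
    return conversions / sends


def relative_lift(rate_a: float, rate_b: float) -> float:
    """Relative improvement of B over A; 0.23 means B is 23% better than A."""
    if rate_a == 0:
        raise ValueError("baseline rate must be non-zero")
    return (rate_b - rate_a) / rate_a


# Hypothetical counts for illustration only (not real campaign data).
rate_a = conversion_rate(conversions=200, sends=10_000)  # 2.00%
rate_b = conversion_rate(conversions=246, sends=10_000)  # 2.46%

print(f"A: {rate_a:.2%}  B: {rate_b:.2%}")
print(f"absolute difference: {rate_b - rate_a:.2%} (percentage points)")
print(f"relative lift: {relative_lift(rate_a, rate_b):.0%}")
```

In this made-up example, a 0.46-point absolute difference is the same thing as a 23% relative lift, so it pays to state which one you mean when you report a result.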

This case study led to our first takeaway of the evening: document your results. Record variant details and results so that you can make actionable recommendations and avoid repeating tests in the future.
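What “documenting your results” looks like depends on your tools, but even a simple structured log answers the two questions that matter later: what changed, and did it win? Below is a minimal sketch, assuming a hypothetical `ABTestRecord` shape and a JSON file as storage. The field names are our own illustration, and the rates are placeholders rather than real results.

```python
import json
from dataclasses import asdict, dataclass


@dataclass
class ABTestRecord:
    """One entry in a hypothetical A/B test log."""
    email: str        # which email or series was tested
    element: str      # the one element that varied between variants
    variant_a: str
    variant_b: str
    metric: str       # e.g. "send-to-convert rate"
    rate_a: float
    rate_b: float
    notes: str = ""

    @property
    def winner(self) -> str:
        if self.rate_b > self.rate_a:
            return "B"
        if self.rate_a > self.rate_b:
            return "A"
        return "tie"


def already_tested(log: list[ABTestRecord], email: str, element: str) -> bool:
    """Check the log before re-running a test on the same email element."""
    return any(r.email == email and r.element == element for r in log)


log = [
    ABTestRecord(
        email="welcome series",
        element="verification copy",
        variant_a="Everyone else is doing it.",
        variant_b="What's in it for me?",
        metric="send-to-convert rate",
        rate_a=0.020,   # hypothetical placeholder rates, not real data
        rate_b=0.0246,
        notes="Carry the winning angle to the verification landing page.",
    )
]

# Persist the log as JSON so results outlive any one meeting or team member.
serialized = json.dumps([asdict(r) for r in log], indent=2)

print(log[0].winner)
print(already_tested(log, "welcome series", "verification copy"))
```

A spreadsheet with the same columns works just as well; the point is that the element, the variants, the metric, and the outcome are all written down in one place.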

Next, we looked at a couple of small-batch A/B tests that Justin Khoo of Email on Acid ran for two of his own sites, Fresh Inbox and Campaign Workhub.

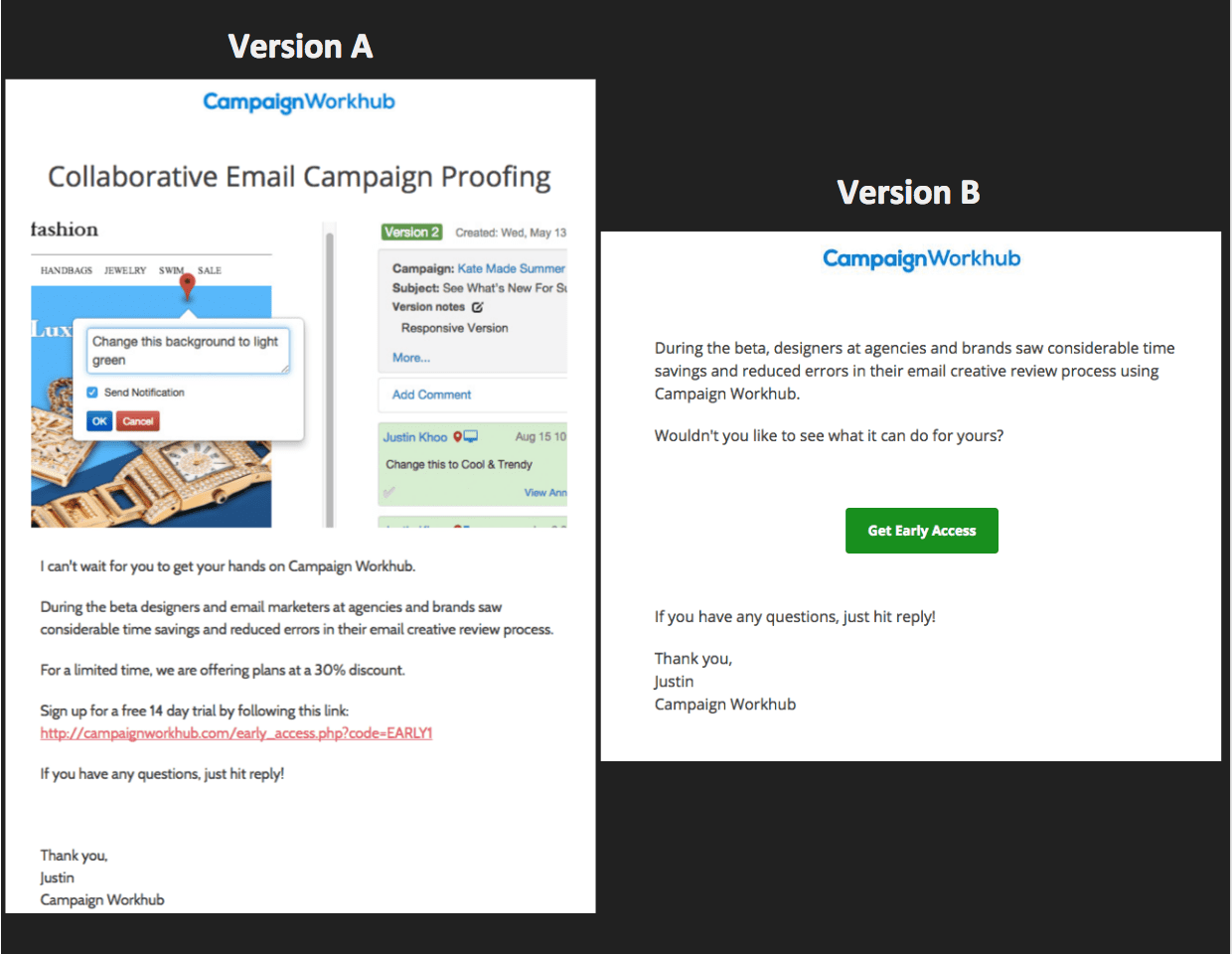

As Justin was getting ready to launch his email proofing tool, Campaign Workhub, he opted to A/B test a message to his signup list.

A) A graphic at the top of the email shows a bit of what the tool does, the copy references “early access,” and a plain-text CTA offers a 30% discount for a “limited time.”

B) A shorter, text-based email with a prominent CTA button. This message focuses on beta results and early access, with no discount offer.

Version B earned a 45% higher click-through rate than version A. The group found it interesting that the simpler version, with no offer, performed better, and the result led into a broader discussion of A/B testing best practices: test only one element at a time, so you can isolate the winning component.

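In the Campaign Workhub test, the two versions differed in several ways at once (the header graphic, the copy length, the CTA style, and the discount offer), so it’s impossible to know whether the results came from the text differences, the CTA button, the missing discount, or some combination.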
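One way to enforce the “one element at a time” rule is to describe each variant as a set of named elements and check how many of them differ before sending. The sketch below is a hypothetical helper, not a feature of any particular email platform, and the dictionaries are our own rough encoding of the two Campaign Workhub variants described above.

```python
def differing_elements(variant_a: dict, variant_b: dict) -> list[str]:
    """Return the names of elements whose values differ between two variants."""
    keys = sorted(set(variant_a) | set(variant_b))
    return [k for k in keys if variant_a.get(k) != variant_b.get(k)]


def is_clean_test(variant_a: dict, variant_b: dict) -> bool:
    """A clean A/B test changes exactly one element, so any lift can be attributed."""
    return len(differing_elements(variant_a, variant_b)) == 1


# Hypothetical encoding of the two Campaign Workhub variants (None = element absent).
workhub_a = {"hero": "graphic", "length": "long", "cta": "text link", "offer": "30% off"}
workhub_b = {"hero": None, "length": "short", "cta": "button", "offer": None}

print(differing_elements(workhub_a, workhub_b))  # all four elements changed
print(is_clean_test(workhub_a, workhub_b))

# A cleaner follow-up: keep everything from A and change only the CTA style.
cta_only = {**workhub_a, "cta": "button"}
print(differing_elements(workhub_a, cta_only))
print(is_clean_test(workhub_a, cta_only))
```

With four elements changed at once, any difference in clicks is spread across all of them; the CTA-only variant is the kind of follow-up that would isolate one cause.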

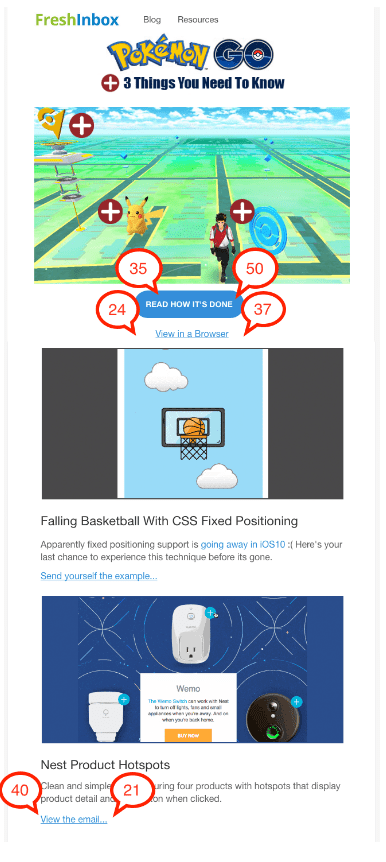

The third and final A/B test we reviewed was for a weekly newsletter digest that Justin sends to his Fresh Inbox subscribers. When Justin isn’t writing amazing content for Email on Acid or developing his own product, he maintains Fresh Inbox, a great blog covering interactive and advanced email design techniques and news.

An A/B test can cover many different email elements, from copy and graphics to headings and CTAs, but this one was all about the subject line.

Subject line A: #EmailGeeks Digest: Interactive Email Galore

Subject line B: #EmailGeeks Digest: Interactive Pokemon GO Email

Anyone who hasn’t been living under a rock knows that Pokémon GO has been wildly popular. Justin typically features one of the newsletter’s topics in the subject line, and this edition had seven to choose from. Featuring Pokémon GO seemed like a no-brainer, but Justin decided to test it anyway.

The results were interesting, though not in the way you might expect. Open rates ended up nearly the same, despite an early surge for version B. But while open rates were similar, the Pokémon GO version’s click-through rate was 23% higher, and the distribution of clicks was markedly different:

Version A clicks on the left, Version B on the right

The article about Nest was more popular with readers who received the topic-agnostic Version A subject line, while clicks for Version B skewed toward the Pokémon GO article. The takeaway from this test: consider the full impact of the elements you’re testing, since they can affect not only open rate but also how readers interact with the email’s content. Digest newsletters are a bit of a special case, since their topics usually don’t share a single goal or theme, but it’s worth keeping in mind whenever you test.
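With small-batch tests like these, it’s worth checking whether a difference (such as similar open rates versus a 23% click lift) is larger than random noise. A standard check for two rates is the two-proportion z-test. Below is a minimal standard-library sketch. It relies on the normal approximation, which assumes reasonably large counts, and the counts are hypothetical, chosen to show how the same 23% relative lift can be inconclusive on a small list yet clear on a large one. They are not Justin’s data.

```python
import math


def two_proportion_z_test(successes_a: int, n_a: int,
                          successes_b: int, n_b: int) -> tuple[float, float]:
    """Two-sided z-test for a difference between two proportions (pooled variance).

    Uses the normal approximation, so it assumes reasonably large counts.
    Returns (z, p_value); positive z means B's rate is higher than A's.
    """
    p_a = successes_a / n_a
    p_b = successes_b / n_b
    pooled = (successes_a + successes_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        raise ValueError("standard error is zero; rates are all 0% or all 100%")
    z = (p_b - p_a) / se
    # Two-sided p-value: 2 * (1 - Phi(|z|)) equals erfc(|z| / sqrt(2)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical clicks/sends: 2.00% vs 2.46% is a 23% relative lift in both cases.
z_small, p_small = two_proportion_z_test(100, 5_000, 123, 5_000)
z_large, p_large = two_proportion_z_test(1_000, 50_000, 1_230, 50_000)

print(f"5,000 per variant:  z={z_small:.2f}, p={p_small:.3f}")
print(f"50,000 per variant: z={z_large:.2f}, p={p_large:.2g}")
```

By this check, the smaller list can’t distinguish the lift from chance at the usual 0.05 threshold, while the larger one can. Recording sample sizes alongside rates makes this check possible later.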

If you’re interested, you can view the full presentation.

And before we go, if you’re looking for an email platform to help you run A/B tests (or C/D/E/etc.), we can help.